When Human Intelligence Stops Mattering

How to think about human/AI collaboration when raw human intelligence stops mattering

We should all be considering a world where raw human intelligence stops mattering in the way it matters now.

Go watch DeepMind's The Thinking Game documentary if you haven't.

DeepMind have been on this AGI mission for a long time. We don't know exactly how or when it will arrive - the path is going to be highly nonlinear and full of surprises. But if you take Demis Hassabis and DeepMind seriously (and you should), the world will be infused with some form of radically transformative and increasingly general intelligence within the next ten years.

We're monkeys with tools

Human intelligence has always been at the center of human progress. It seems increasingly likely that this is about to change.

We're essentially monkeys with tools, and an insatiable drive to make things better, to build better stuff. We have zero loyalty to any particular method, tool, or way of thinking - we're loyal to outcomes. If a new approach gets us to the goal faster and cheaper, we do it.

Of course, there are exceptions to this. But they're few and far between.

For most of history, human intelligence has been at the center. We defined the problems. Tools helped us solve them. From stone axes to calculators to narrow AI, the pattern held: human frames the problem > tool accelerates the solution.

The assumption buried in this is that human intelligence is structurally necessary. Tools are powerful, but they're downstream of human thinking. We're the bottleneck, but we're also the anchor.

When intelligence becomes general

As AI becomes more general, something changes.

Narrow tools can optimize within a problem you've defined. General intelligence can define the problem itself. It can propose better questions than you thought to ask. It can navigate solution spaces you couldn't imagine, let alone explore.

This could change the arrangement.

Early phase: Human still frames the problem. AI fills in gaps, accelerates execution.

Middle phase: Human describes a rough goal. AI refines the structure, breaks it into subproblems, proposes strategies. The human's job becomes steering and interpreting, not out-thinking.

Late phase: Human framing becomes a bottleneck. AI independently defines problems, explores solution spaces, and executes. Human involvement slows things down.

A different kind of intelligence

But if AI turns out to be a fundamentally different kind of intelligence - not just faster but differently shaped - the decoupling could go deeper.

AI might frame problems in ways we wouldn't and couldn't. It might find solution paths that don't map onto human intuitions at all. In that scenario, the most effective move might be to just get out of the way.

I don't know if this is how it goes. But it's worth considering.

What's going to happen?

For now - maybe the next 5-10 years - consider the following crude example:

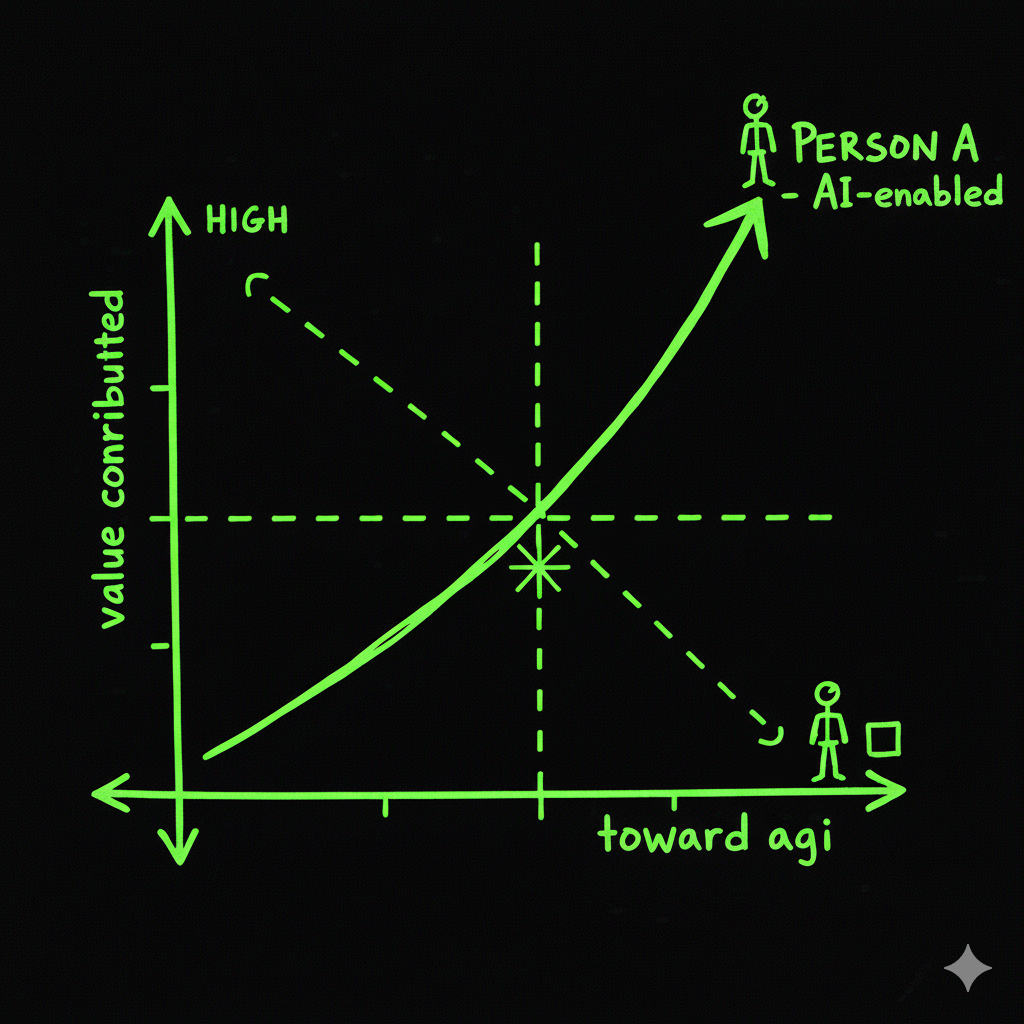

Person A has little to mediocre domain intelligence, but is excellent at modeling how AI systems behave. Knows how to allocate tasks and interpret outputs. Has a strong "theory of mind" for the model.

Person B is brilliant in the traditional sense. Deep domain expertise. But shallow with AI. Treats it like a search engine or a junior assistant.

In this transitional phase, in many domains, Person A will increasingly produce more value than Person B. The ability to model the model - to work with something smarter than you - could matter more than your own problem-solving capacity.

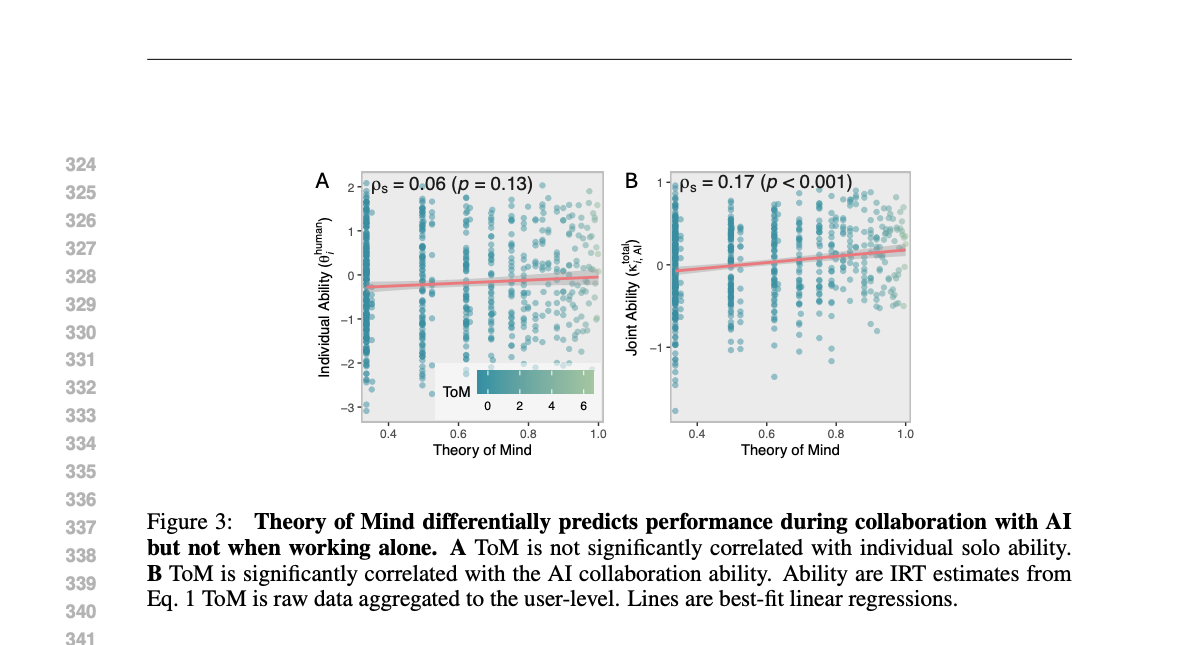

Recent research supports this idea. A preprint study suggests that a person's ability to model the AI's mind - understanding how it responds, reasons, and behaves - is a distinct skill from traditional human problem-solving, and that it's often more predictive of good outcomes when working with AI.

The comforting narrative is some variation of "human expert + AI will always beat AI alone." That's probably true in some transitional band of capability, and it will hold longer in domains with higher complexity. But beyond that band, the human contribution might shrink toward zero for instrumental purposes.

If AI becomes better at getting us to our goals, we'll likely route around humans in more and more high-stakes domains. Scientific discovery. Medical diagnosis and treatment. Engineering. Strategy. The things we've historically anchored identity to - being the primary agents of insight and progress - could quietly migrate to systems that do it better. Even the "AI-whisperer" advantage could erode over time as AI needs less steering.

If we trend in this direction, it seems logical to me that a very small number of highly leveraged individuals will be responsible for allocating intelligence toward solving most of the world's problems.

The rest of us plebs will be left to renegotiate meaning and value - which probably needed to happen anyway.